Deterministic Optimal Economic Growth Model

Contents

Deterministic Optimal Economic Growth Model¶

Randall Romero Aguilar, PhD

This demo is based on the original Matlab demo accompanying the Computational Economics and Finance 2001 textbook by Mario Miranda and Paul Fackler.

Original (Matlab) CompEcon file: demdp06.m

Running this file requires the Python version of CompEcon. This can be installed with pip by running

!pip install compecon --upgrade

Last updated: 2022-Oct-23

About¶

Welfare maximizing social planner must decide how much society should consume and invest. Model is of special interest because it has a known closed-form solution.

States

s stock of wealth

Actions

k capital investment

Parameters

β capital production elasticity

δ discount factor

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from compecon import BasisChebyshev, DPmodel, DPoptions, qnwnorm

Model parameters¶

Assuming that the marginal productivity of capital is \(\beta=0.5\) and the discount factor is \(\delta=0.9\)

β, δ = 0.5, 0.9

Analytic results¶

The steady-state values for this model are

sstar = (β * δ) ** (β / (1 - β)) # steady-state wealth kstar = β * δ * sstar # steady-state capital investment vstar = np.log(sstar - kstar) / (1 - δ) # steady-state value pstar = 1 / (sstar * (1 - β * δ)) # steady-state shadow price b = 1 / (1 - δ * β)

print(‘\n\nSteady-State’) for var, value in zip([‘Wealth’,’Investment’,’Value’,’Shadow price’], [sstar,kstar,vstar,pstar]): print(f’\t{var:12s} = {value:8.4f}’)

sstar = (β * δ) ** (β / (1 - β)) # steady-state wealth

kstar = β * δ * sstar # steady-state capital investment

vstar = np.log(sstar - kstar) / (1 - δ) # steady-state value

pstar = 1 / (sstar * (1 - β * δ)) # steady-state shadow price

b = 1 / (1 - δ * β)

ss = pd.Series([sstar,kstar,vstar,pstar], index=['Wealth','Investment','Value','Shadow Price'])

ss

Wealth 0.450000

Investment 0.202500

Value -13.963447

Shadow Price 4.040404

dtype: float64

The true value function is

def vtrue(wealth): # analytic value function

return vstar + b * (np.log(wealth) - np.log(sstar))

The true policy function is

def ktrue(wealth): #analytic policy function

return δ*β*wealth

Numeric results¶

State space¶

The state variable is s=”Wealth”, which we restrict to \(0\in[0.2, 1.0]\).

Here, we represent it with a Chebyshev basis, with \(n=15\) nodes.

n, smin, smax = 15, 0.2, 1.0

basis = BasisChebyshev(n, smin, smax, labels=['Wealth'])

Action space¶

The choice variable k=”Investment” must be nonnegative.

def bounds(s, i=None, j=None):

return np.zeros_like(s), s[:]

Reward function¶

The reward function is the utility of consumption=\(s-k\).

def reward(s, k, i=None, j=None):

sk = s - k

u = np.log(sk)

ux= - sk ** -1

uxx = - sk ** -2

return u, ux, uxx

State transition function¶

Next period, wealth will be equal to production from available initial capital \(k\), that is \(s' = k^\beta\)

def transition(s, k, i=None, j=None, in_=None, e=None):

g = k ** β

gx = β * k **(β - 1)

gxx = (β - 1) * β * k ** (β - 2)

return g, gx, gxx

Model structure¶

The value of wealth \(s\) satisfies the Bellman equation

To solve and simulate this model,use the CompEcon class DPmodel

growth_model = DPmodel(basis, reward, transition, bounds,

x=['Investment'],

discount=δ)

Solving the model¶

Solving the growth model by collocation, using Newton algorithm and a maximum of 20 iterations

options = dict(show=True,

algorithm='newton',

maxit=20)

snodes = growth_model.Value.nodes

S = growth_model.solve(vtrue(snodes), ktrue(snodes), **options)

Solving infinite-horizon model collocation equation by Newton's method

iter change time

------------------------------

0 4.7e-07 0.0000

1 1.6e-12 0.0000

Elapsed Time = 0.00 Seconds

DPmodel.solve returns a pandas DataFrame with the following data:

S.head()

| Wealth | value | resid | Investment | |

|---|---|---|---|---|

| Wealth | ||||

| 0.200000 | 0.200000 | -15.437865 | 2.766030e-07 | 0.090000 |

| 0.205369 | 0.205369 | -15.389699 | -2.099164e-07 | 0.092416 |

| 0.210738 | 0.210738 | -15.342776 | -2.488242e-07 | 0.094832 |

| 0.216107 | 0.216107 | -15.297033 | -1.102768e-07 | 0.097248 |

| 0.221477 | 0.221477 | -15.252412 | 5.644805e-08 | 0.099664 |

We are also interested in the shadow price of wealth (the first derivative of the value function) and the approximation error.

To analyze the dynamics of the model, it also helps to compute the optimal change of wealth.

S['shadow price'] = growth_model.Value(S['Wealth'],1)

S['error'] = S['value'] - vtrue(S['Wealth'])

S['D.Wealth'] = transition(S['Wealth'], S['Investment'])[0] - S['Wealth']

S.head()

| Wealth | value | resid | Investment | shadow price | error | D.Wealth | |

|---|---|---|---|---|---|---|---|

| Wealth | |||||||

| 0.200000 | 0.200000 | -15.437865 | 2.766030e-07 | 0.090000 | 9.090758 | 4.241867e-07 | 0.100000 |

| 0.205369 | 0.205369 | -15.389699 | -2.099164e-07 | 0.092416 | 8.853206 | -3.099038e-08 | 0.098631 |

| 0.210738 | 0.210738 | -15.342776 | -2.488242e-07 | 0.094832 | 8.627699 | -4.010325e-08 | 0.097210 |

| 0.216107 | 0.216107 | -15.297033 | -1.102768e-07 | 0.097248 | 8.413362 | 1.253504e-07 | 0.095739 |

| 0.221477 | 0.221477 | -15.252412 | 5.644805e-08 | 0.099664 | 8.209399 | 3.151235e-07 | 0.094220 |

Solving the model by Linear-Quadratic Approximation¶

The DPmodel.lqapprox solves the linear-quadratic approximation, in this case arround the steady-state. It returns a LQmodel which works similar to the DPmodel object.

We also compute the shadow price and the approximation error to compare these results to the collocation results.

growth_lq = growth_model.lqapprox(sstar, kstar)

L = growth_lq.solution(basis.nodes)

L['shadow price'] = L['value_Wealth']

L['error'] = L['value'] - vtrue(L['Wealth'])

L['D.Wealth'] = L['Wealth_next']- L['Wealth']

L.head()

| Wealth | Investment | value | value_Wealth | Wealth_next | shadow price | error | D.Wealth | |

|---|---|---|---|---|---|---|---|---|

| Wealth | ||||||||

| 0.202191 | 0.202191 | -0.020528 | -15.014820 | 4.444953 | 0.202192 | 4.444953 | 0.403234 | 2.977733e-07 |

| 0.219577 | 0.219577 | -0.004880 | -14.937786 | 4.416570 | 0.219578 | 4.416570 | 0.330284 | 2.769166e-07 |

| 0.253590 | 0.253590 | 0.025731 | -14.788512 | 4.361045 | 0.253590 | 4.361045 | 0.217716 | 2.361148e-07 |

| 0.302742 | 0.302742 | 0.069968 | -14.576129 | 4.280804 | 0.302742 | 4.280804 | 0.107984 | 1.771510e-07 |

| 0.364886 | 0.364886 | 0.125897 | -14.313256 | 4.179353 | 0.364886 | 4.179353 | 0.031397 | 1.026024e-07 |

growth_lq.G

array([[1.]])

Plotting the results¶

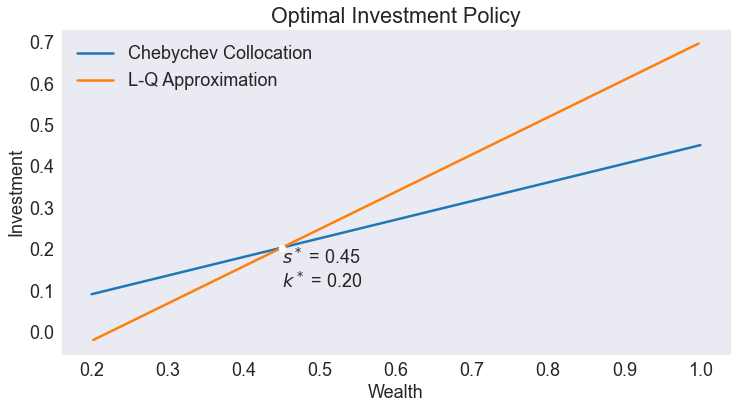

Optimal Policy¶

fig1, ax = plt.subplots()

ax.set(title='Optimal Investment Policy', xlabel='Wealth', ylabel='Investment')

ax.plot(S.Investment, label='Chebychev Collocation')

ax.plot(L.Investment, label='L-Q Approximation')

ax.plot(ss['Wealth'], ss['Investment'],'wo')

ax.annotate(f"$s^*$ = {ss['Wealth']:.2f}\n$k^*$ = {ss['Investment']:.2f}",ss[['Wealth', 'Investment']],va='top')

ax.legend(loc= 'upper left');

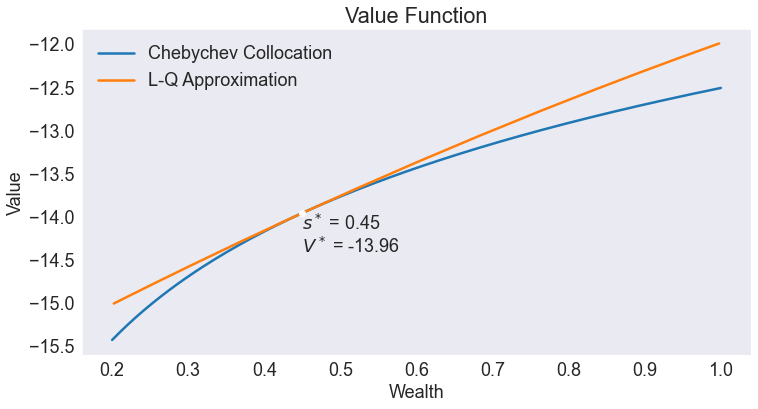

Value Function¶

fig2, ax = plt.subplots()

ax.set(title='Value Function', xlabel='Wealth', ylabel='Value')

ax.plot(S.value, label='Chebychev Collocation')

ax.plot(L.value, label='L-Q Approximation')

ax.plot(ss['Wealth'], ss['Value'],'wo')

ax.annotate(f"$s^*$ = {ss['Wealth']:.2f}\n$V^*$ = {ss['Value']:.2f}",ss[['Wealth', 'Value']],va='top')

#ax.annotate(sstar, vstar, f'$s^* = {sstar:.2f}$\n$V^* = {vstar:.2f}$', 'bo', (10, -17),ms=10)

ax.legend(loc= 'upper left');

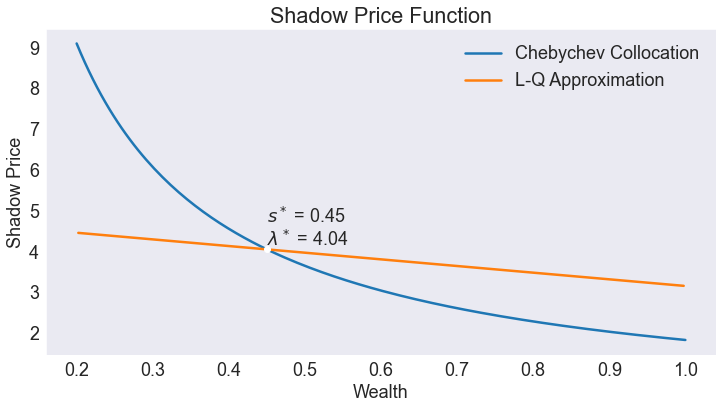

Shadow Price Function¶

fig3, ax = plt.subplots()

ax.set(title='Shadow Price Function', xlabel='Wealth', ylabel='Shadow Price')

ax.plot(S['shadow price'], label='Chebychev Collocation')

ax.plot(L['shadow price'], label='L-Q Approximation')

ax.plot(ss['Wealth'], ss['Shadow Price'],'wo')

ax.annotate(f"$s^*$ = {ss['Wealth']:.2f}\n$\lambda^*$ = {ss['Shadow Price']:.2f}",ss[['Wealth', 'Shadow Price']],va='bottom')

ax.legend(loc= 'upper right');

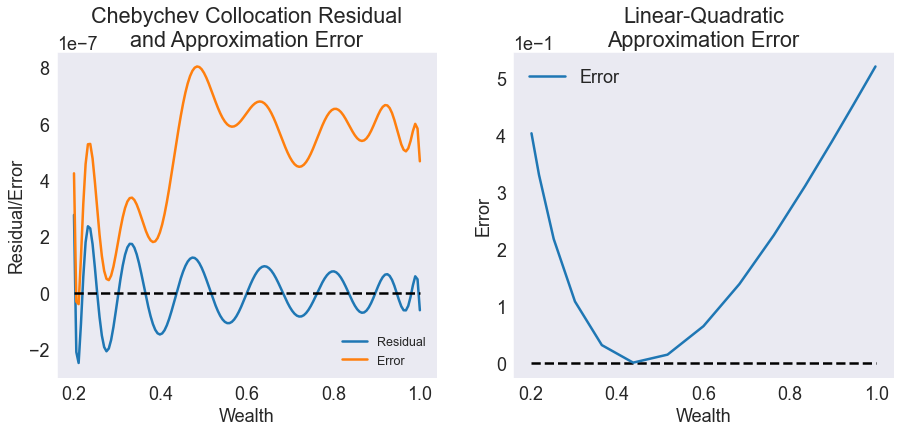

Chebychev Collocation Residual and Approximation Error vs. Linear-Quadratic Approximation Error¶

fig4, (ax0, ax1) = plt.subplots(1,2,figsize=[15, 6])

ax0.set(title='Chebychev Collocation Residual\nand Approximation Error', xlabel='Wealth', ylabel='Residual/Error')

ax0.plot(S[['resid', 'error']])

ax0.legend(['Residual','Error'], loc='lower right', fontsize='x-small')

ax0.hlines(0,smin,smax,'k',linestyles='--')

ax0.ticklabel_format(style='sci', axis='y', scilimits=(-1,1))

ax1.set(title='Linear-Quadratic\nApproximation Error', xlabel='Wealth', ylabel='Error')

ax1.hlines(0,smin,smax,'k',linestyles='--')

ax1.plot(L['error'], label='Error')

ax1.legend(loc='upper left')

ax1.ticklabel_format(style='sci', axis='y', scilimits=(-1,1))

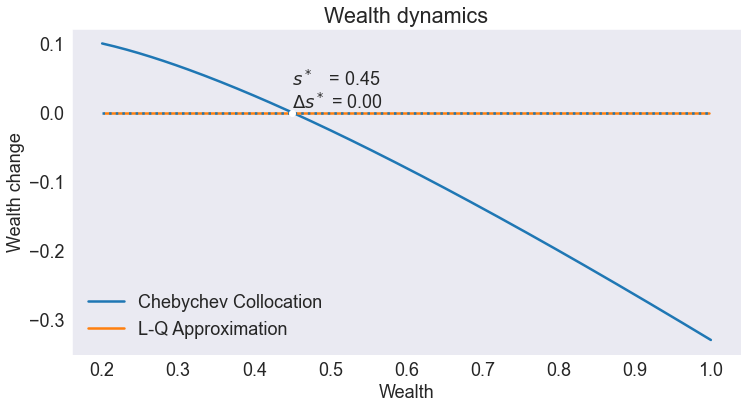

Wealth dynamics¶

Notice how the steady-state is stable in the Chebyshev collocation solution, but unstable in the linear-quadratic approximation. In particular, simulated paths of wealth in the L-Q approximation will converge to zero, unless the initial states is within a small neighborhood of the steady-state.

fig5, ax = plt.subplots()

ax.set(title='Wealth dynamics', xlabel='Wealth', ylabel='Wealth change')

ax.plot(S['D.Wealth'], label='Chebychev Collocation')

ax.plot(L['D.Wealth'], label='L-Q Approximation')

ax.hlines(0,smin,smax,linestyles=':')

ax.plot(ss['Wealth'], 0, 'wo')

ax.annotate(f"$s^*$ = {ss['Wealth']:.2f}\n$\Delta s^*$ = 0.00",[ss['Wealth'], 0], va='bottom')

plt.legend(loc= 'lower left');

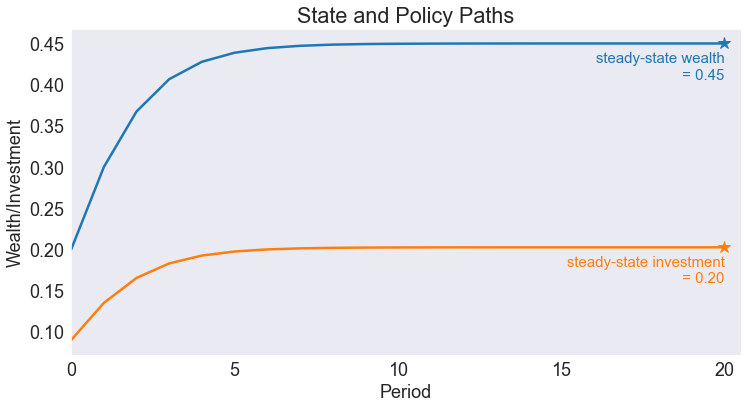

Simulating the model¶

We simulate 20 periods of the model starting from \(s=s_{\min}\)

T = 20

data = growth_model.simulate(T, smin)

Simulated State and Policy Paths¶

opts = dict(ha='right', va='top', fontsize='small')

fig6, ax = plt.subplots()

ax.set(title='State and Policy Paths',

xlabel='Period',

ylabel='Wealth/Investment',

xlim=[0, T + 0.5],

xticks=range(0,T+1,5))

ax.plot(data[['Wealth', 'Investment']])

ax.plot(T, sstar, color='C0', marker='*', ms=12)

ax.annotate(f"steady-state wealth\n = {sstar:.2f}", [T, sstar-0.01], color='C0', **opts)

ax.plot(T, kstar, color='C1', marker='*', ms=12)

ax.annotate(f"steady-state investment\n = {kstar:.2f}", [T, kstar-0.01], color='C1', **opts);